Effect of the batch size with the BIG model. All trained on a single GPU. | Download Scientific Diagram

Layer-Centric Memory Reuse and Data Migration for Extreme-Scale Deep Learning on Many-Core Architectures

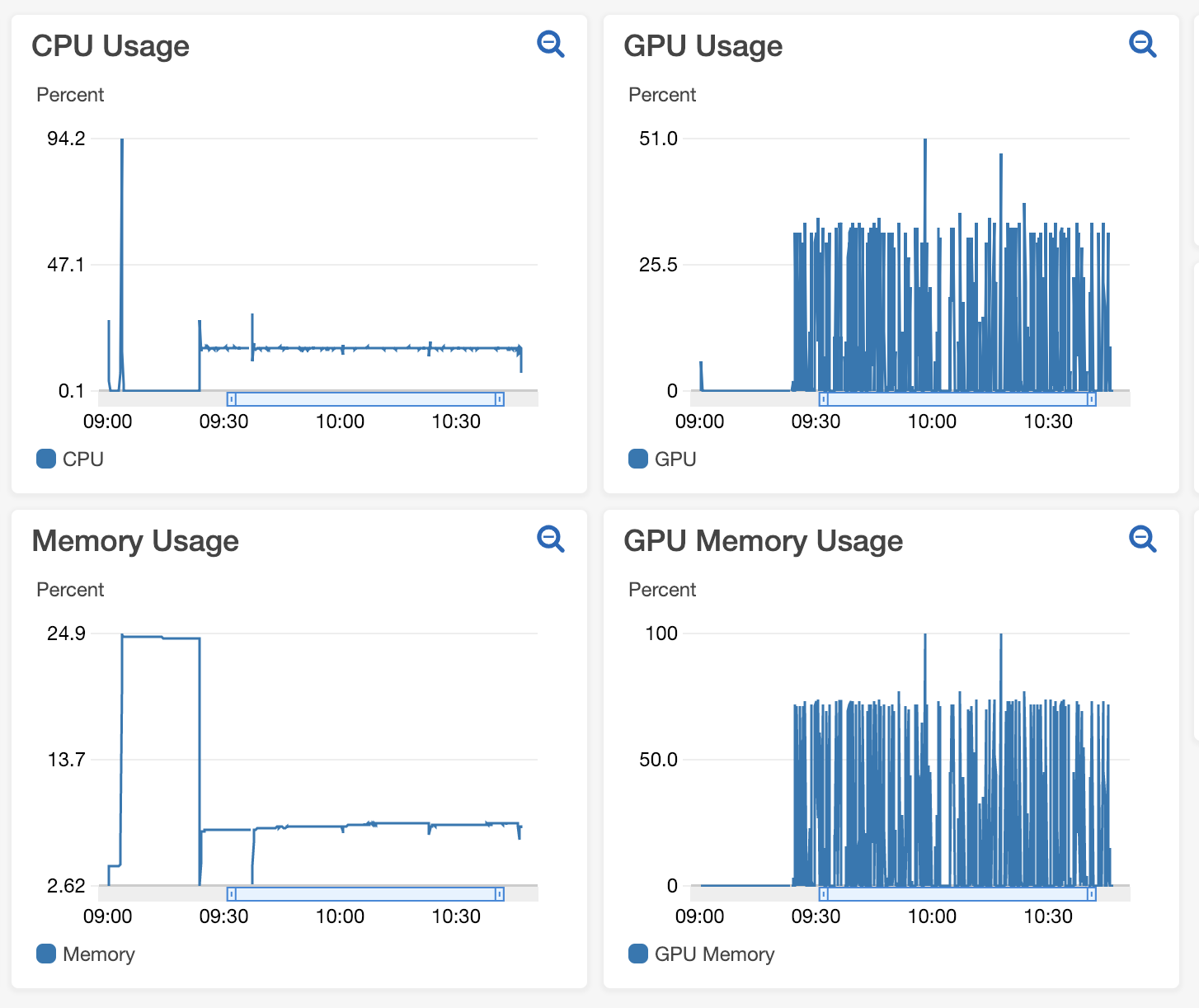

OpenShift dashboards | GPU-Accelerated Machine Learning with OpenShift Container Platform | Dell Technologies Info Hub

GPU memory usage as a function of batch size at inference time [2D,... | Download Scientific Diagram

GPU Memory Size and Deep Learning Performance (batch size) 12GB vs 32GB -- 1080Ti vs Titan V vs GV100

pytorch - Why tensorflow GPU memory usage decreasing when I increasing the batch size? - Stack Overflow